I got a good comment on my previous article about implementing the Game of Life in CUDA, pointing out that I was leaving a lot of performance on the table by only considering a single step at a time.

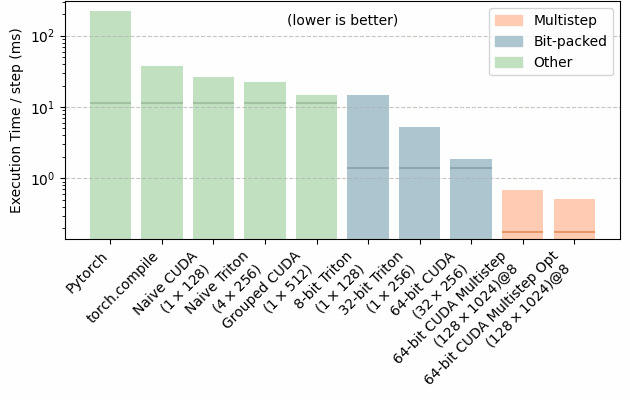

Their point was that my implementations were bound by the speed of DRAM. An A40 can move 696 GB/s between DRAM and the cores, and my setup required sending at least one bit in each direction per cell per step, which worked out to 1.4ms.
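To make that bound concrete, here is a back-of-envelope sketch. It assumes one bit of state per cell, read once and written once per step, and treats 696 GB/s as 696 × 10⁹ bytes/s. The 65536 × 65536 grid in the example is purely hypothetical, not necessarily the grid the timings were measured on.

```python
# Back-of-envelope DRAM bound for one Game of Life step.
# Assumption: each cell is 1 bit, read once and written once per step.
A40_DRAM_BYTES_PER_S = 696e9  # bandwidth figure quoted in the text


def dram_bound_seconds(cells: float, bandwidth: float = A40_DRAM_BYTES_PER_S) -> float:
    """Minimum time per step if 2 bits per cell must cross the DRAM bus."""
    bytes_moved = cells * 2 / 8  # 1 bit in + 1 bit out, 8 bits per byte
    return bytes_moved / bandwidth


def implied_cells(seconds: float, bandwidth: float = A40_DRAM_BYTES_PER_S) -> float:
    """Inverse: the number of cells a given per-step time corresponds to."""
    return seconds * bandwidth * 8 / 2


if __name__ == "__main__":
    print(f"1.4 ms/step at 696 GB/s ~ {implied_cells(1.4e-3):.3g} cells")
    # Hypothetical grid size, chosen only for illustration.
    print(f"65536 x 65536 grid: >= {dram_bound_seconds(65536**2) * 1e3:.3f} ms/step")
```

Inverting the 1.4ms figure this way suggests a grid of roughly 3.9 × 10⁹ cells, but that is an inference from the stated numbers, not a measurement.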

But DRAM is the slowest memory on a graphics card. The L1 cache sits inside each Streaming Multiprocessor, much closer to where the calculations happen. It’s tiny, but has incredible bandwidth: potentially 67 TB/s. Fully utilizing this is nigh impossible, but even with mediocre utilization we’d do far better than before.
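One common way to act on this, usually called temporal blocking, is to load a tile plus a halo of k extra cells into fast on-chip memory, advance it k steps there, and write back only the tile's interior, so DRAM traffic is paid once per k steps rather than once per step. The pure-Python sketch below shows the idea on the CPU, with a plain list standing in for shared memory/L1. The function names, tile size, and toroidal wrap-around are illustrative assumptions, not the CUDA implementation from the rest of the article.

```python
"""Temporal blocking for the Game of Life, sketched on the CPU.

Assumptions (illustrative only): a toroidal grid stored as lists of 0/1,
square tiles, and 1 bit of DRAM traffic per cell read or written.
"""


def rule(alive, n):
    """Conway's rule: birth on 3 neighbours, survival on 2 or 3."""
    return 1 if n == 3 or (n == 2 and alive) else 0


def life_step(grid):
    """Reference: one global step on a toroidal grid."""
    h, w = len(grid), len(grid[0])
    return [[rule(grid[y][x],
                  sum(grid[(y + dy) % h][(x + dx) % w]
                      for dy in (-1, 0, 1) for dx in (-1, 0, 1) if dy or dx))
             for x in range(w)]
            for y in range(h)]


def step_local(tile):
    """One step on a non-wrapping tile. The outer ring lacks full
    neighbourhoods, so the result shrinks by one cell on every side."""
    h, w = len(tile), len(tile[0])
    return [[rule(tile[y][x],
                  sum(tile[y + dy][x + dx]
                      for dy in (-1, 0, 1) for dx in (-1, 0, 1) if dy or dx))
             for x in range(1, w - 1)]
            for y in range(1, h - 1)]


def temporal_blocked_steps(grid, k, tile=8):
    """Advance the whole grid k steps, reading and writing each tile once."""
    h, w = len(grid), len(grid[0])
    out = [[0] * w for _ in range(h)]
    for ty in range(0, h, tile):
        for tx in range(0, w, tile):
            th, tw = min(tile, h - ty), min(tile, w - tx)
            # Single "load": the tile plus a k-cell halo, wrapping at the edges.
            local = [[grid[(ty + y) % h][(tx + x) % w]
                      for x in range(-k, tw + k)]
                     for y in range(-k, th + k)]
            # k steps entirely in "fast memory"; the halo shrinks by 1 per step.
            for _ in range(k):
                local = step_local(local)
            # Single "store": local is now exactly th x tw.
            for y in range(th):
                out[ty + y][tx:tx + tw] = local[y]
    return out


def amortized_bits_per_cell_step(k, tile):
    """DRAM bits per cell per step: (tile + 2k)^2 read + tile^2 written, over k steps."""
    return ((tile + 2 * k) ** 2 / tile ** 2 + 1) / k


if __name__ == "__main__":
    glider = [[0] * 16 for _ in range(16)]
    for y, x in [(0, 1), (1, 2), (2, 0), (2, 1), (2, 2)]:
        glider[y][x] = 1
    ref = glider
    for _ in range(4):
        ref = life_step(ref)
    assert temporal_blocked_steps(glider, 4) == ref
    print(amortized_bits_per_cell_step(8, 64))
```

With k = 8 and 64 × 64 tiles, `amortized_bits_per_cell_step` gives about 0.32 bits per cell per step, versus roughly 2 for the one-step-at-a-time scheme. The price is redundant computation on the halo, which grows with k.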
